
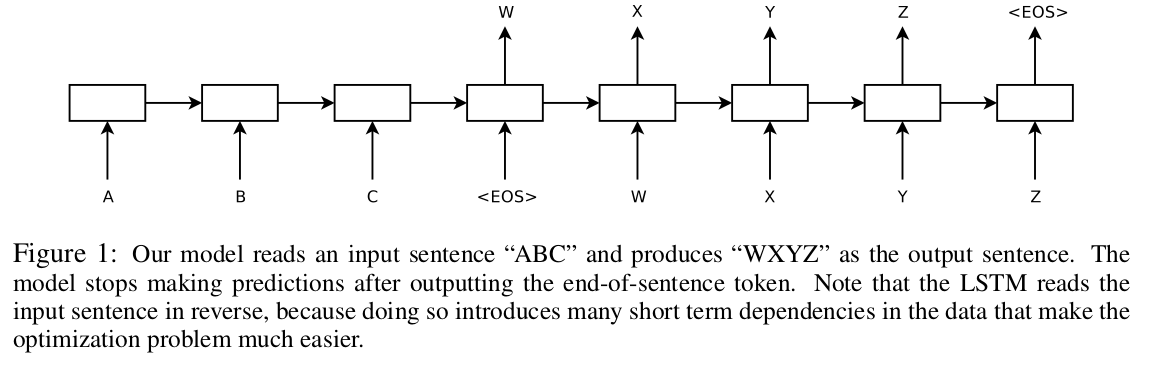

The Long Short-Term Memory (LSTM) architecture [16] can solve general sequence-to-sequence problems.

Despite their flexibility and power, DNNs can only be applied to problems whose inputs and targets can be sensibly encoded as vectors of fixed dimensionality. This is a significant limitation, since many important problems are best expressed as sequences whose lengths are not known a priori. For example, speech recognition and machine translation are sequential problems. Likewise, question answering can be seen as mapping a sequence of words representing the question to a sequence of words representing the answer. A domain-independent method that learns to map sequences to sequences would therefore be useful.
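How an LSTM gets around the fixed-dimensionality limitation can be sketched as follows: the network reads the input one vector per time step and carries a hidden state of constant size, so a sequence of any length ends up summarized as a vector of the same dimension. This is a minimal, untrained sketch in pure Python under stated assumptions: `LSTMCell` and `encode` are hypothetical names, the weights are random rather than learned, it uses a standard single-layer LSTM cell rather than any specific configuration from the paper, and the decoder that would generate the output sequence from this vector is omitted.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """Minimal single-layer LSTM cell (standard gates, no peepholes).

    Hypothetical illustration: weights are random and untrained.
    """

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.input_size = input_size
        self.hidden_size = hidden_size
        n = input_size + hidden_size
        # One weight matrix and bias vector per gate:
        # input (i), forget (f), output (o), candidate cell value (g).
        self.W = {
            gate: [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(hidden_size)]
            for gate in "ifog"
        }
        self.b = {gate: [0.0] * hidden_size for gate in "ifog"}

    def step(self, x, h, c):
        if len(x) != self.input_size:
            raise ValueError("input vector has the wrong size")
        z = list(x) + list(h)  # concatenate current input with previous hidden state

        def affine(gate):
            return [sum(w * v for w, v in zip(row, z)) + bias
                    for row, bias in zip(self.W[gate], self.b[gate])]

        i = [sigmoid(a) for a in affine("i")]
        f = [sigmoid(a) for a in affine("f")]
        o = [sigmoid(a) for a in affine("o")]
        g = [math.tanh(a) for a in affine("g")]
        # New cell state: keep part of the old memory, add part of the candidate.
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new


def encode(cell, sequence):
    """Read a variable-length sequence step by step; return the final hidden state."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    for x in sequence:
        h, c = cell.step(x, h, c)
    return h


cell = LSTMCell(input_size=3, hidden_size=4)
short_seq = [[1.0, 0.0, 0.0]] * 3
long_seq = [[0.0, 1.0, 0.0]] * 7
print(len(encode(cell, short_seq)), len(encode(cell, long_seq)))  # 4 4
```

Inputs of length 3 and 7 both come out as 4-dimensional vectors, which is exactly the fixed-size representation a downstream network (in the seq2seq setting, a second LSTM acting as decoder) can consume.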

A trick: the LSTM did not suffer on very long sentences. The simple trick of reversing the order of the words in the source sentence (but not in the target sentence) is one of the key technical contributions of this work.
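One explanation offered in the original paper is that reversal leaves the average distance between corresponding source and target words unchanged, while moving the first few source words right next to the first few target words, which shortens the minimal time lag the network has to bridge. The sketch below makes that arithmetic concrete. It assumes, purely for illustration, an equal-length sentence pair where source word `i` corresponds to target word `i`, the encoder reads the source at steps `0..n-1`, and the decoder emits target word `i` at step `n + i`; `reverse_source` and `alignment_gaps` are hypothetical helper names.

```python
def reverse_source(src_tokens):
    """Reverse only the source sentence; the target sentence keeps its order."""
    return src_tokens[::-1]


def alignment_gaps(n, reverse):
    """Time-step gap between source word i and target word i, for i in 0..n-1.

    Assumes a monotone one-to-one alignment between two sentences of length n:
    the encoder reads the source at steps 0..n-1 and the decoder emits
    target word i at step n + i.
    """
    gaps = []
    for i in range(n):
        src_pos = (n - 1 - i) if reverse else i  # where source word i sits in the input stream
        gaps.append((n + i) - src_pos)
    return gaps


src = ["a", "b", "c", "d"]
print(reverse_source(src))               # ['d', 'c', 'b', 'a']
print(alignment_gaps(4, reverse=False))  # [4, 4, 4, 4]
print(alignment_gaps(4, reverse=True))   # [1, 3, 5, 7]
```

Both orderings have the same average gap (4 here), but with reversal the first target word is only one step away from the source word it depends on, instead of `n` steps.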